Surrogate data, that is, transformations of a time series that preserve some of its features but not others, can be used to test hypotheses about the structure of the data and to gain insight into how the underlying signal may be distorted by measurement.

What is surrogate data?

Surrogate data are transformations of a time series that preserve some of its features but destroy others. Their main purpose is to test a hypothesis about the structure of the time series, such as whether it is random or whether it is nonlinear, by comparing a statistic computed on the measured data with the same statistic computed on the surrogates.

For instance, you could use surrogate data to determine whether a nonlinear metric (e.g., the Lyapunov exponent or the correlation dimension) computed on the data (for example, EEG recordings) differs significantly from the same metric computed on surrogates that are consistent with a linear process.

How is surrogate data useful?

Let’s consider the example of a linear process

x_n = 0.9x_{n-1} + e_n (eqn. 1)

where e_n is Gaussian white noise. Suppose this process is observed through a nonlinear measurement function. Such a situation could arise, for example, from properties of the instrumentation or of the medium through which the signal propagates:

y_n = h(x_n) = x_n^3 (eqn. 2)
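Eqns. 1 and 2 can be simulated in a few lines. The sketch below assumes that e_n is zero-mean, unit-variance Gaussian white noise. The function name `simulate`, the series length and the seed are illustrative choices, not taken from the original.

```python
import numpy as np


def simulate(n=2000, a=0.9, seed=0):
    """Return (x, y): the AR(1) process of eqn. 1 and its cubed observation (eqn. 2).

    Assumes e_n is zero-mean, unit-variance Gaussian white noise.
    """
    rng = np.random.default_rng(seed)
    e = rng.standard_normal(n)
    x = np.empty(n)
    # Draw x_0 from the stationary distribution (variance 1 / (1 - a^2)).
    x[0] = e[0] / np.sqrt(1.0 - a**2)
    for i in range(1, n):
        x[i] = a * x[i - 1] + e[i]  # eqn. 1: linear dynamics
    y = x**3  # eqn. 2: static nonlinear measurement h(x) = x^3
    return x, y


x, y = simulate()
```

Plotting `x` and `y` against time gives series of the kind described in Figures 1 and 2.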

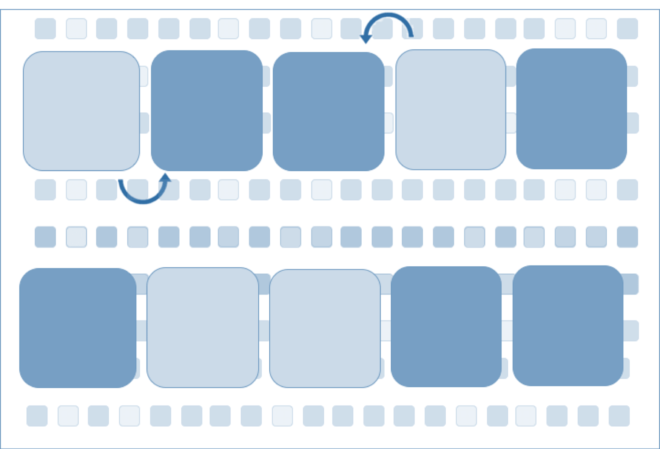

Figure 1: Linear process x_n


If one observes x_n directly (Figure 1), it looks like random noise fluctuations. In contrast, y_n (Figure 2) looks completely different: the cubic transformation amplifies large excursions into spikes and bursts.

Figure 2: Linear process observed through a static nonlinear function

So if one observes only y_n, one could wrongly conclude that nonlinear dynamics or structure is present. In fact, a simpler explanation would suffice to explain these interesting features: a linear process distorted by the measurement function. This is one of the core ideas of surrogate testing: to check whether a simple explanation accounts for the interesting features in your data. In practice, this is done by formulating a null hypothesis. For nonlinearity testing, there are three widely used null hypotheses. Each has a corresponding algorithm for generating surrogate data, and we discuss them below.

Algorithms to generate surrogate data

Algorithm 1 tests the null hypothesis that the data are independent and identically distributed (i.i.d.) noise, i.e., that there is no temporal correlation at all. [1]

Surrogate data are generated by randomly shuffling the time order of the original time series. The surrogate is guaranteed to have the same amplitude distribution as the original data, since it consists of exactly the same values, just at different time positions. On the other hand, any temporal correlations that may have been present in the data are destroyed. If measures of temporal correlation do not differ between the original and surrogate data, the null hypothesis cannot be rejected.
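The shuffle step is a single random permutation. The following is a minimal NumPy sketch. The helper names `shuffle_surrogate` and `lag1_autocorr` are hypothetical. The lag-1 autocorrelation is used here only as one simple measure of temporal correlation.

```python
import numpy as np


def shuffle_surrogate(data, rng=None):
    """Algorithm 1: randomly permute the time order of the data.

    The amplitude distribution is preserved exactly; temporal correlations are destroyed.
    """
    rng = np.random.default_rng(rng)
    return rng.permutation(np.asarray(data))


def lag1_autocorr(s):
    """Lag-1 autocorrelation, a simple linear measure of temporal correlation."""
    s = np.asarray(s, dtype=float)
    s = s - s.mean()
    return np.dot(s[:-1], s[1:]) / np.dot(s, s)


# Usage: an AR(1) series (eqn. 1) versus its shuffled surrogate.
rng = np.random.default_rng(1)
x = np.zeros(2000)
for i in range(1, len(x)):
    x[i] = 0.9 * x[i - 1] + rng.standard_normal()
surrogate = shuffle_surrogate(x, rng)
print(lag1_autocorr(x), lag1_autocorr(surrogate))  # roughly 0.9 and roughly 0
```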

Algorithm 2 tests the null hypothesis that the data are linearly filtered Gaussian noise observed through a linear measurement function. [2]

In this case, the surrogate is created by first computing the Fourier transform of the data and randomizing the resulting phases while keeping the amplitudes intact. The inverse Fourier transform is then computed from the intact amplitudes and the randomized phases. The result is a surrogate with the same power spectrum as the original data, and therefore the same linear autocorrelation. Any nonlinear structure, which is carried by the Fourier phases, is destroyed.
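The following is a minimal sketch of phase randomization, assuming NumPy's real FFT (`np.fft.rfft` / `np.fft.irfft`). Working with the one-sided spectrum keeps the surrogate real-valued. The DC (mean) term and, for even lengths, the Nyquist term are left untouched because they must stay real. The function name is hypothetical. The demo input is white noise passed through a moving-average filter, chosen only as an example of linearly filtered noise.

```python
import numpy as np


def phase_randomized_surrogate(data, rng=None):
    """Algorithm 2: keep the Fourier amplitudes, randomize the Fourier phases."""
    rng = np.random.default_rng(rng)
    data = np.asarray(data, dtype=float)
    n = len(data)
    spectrum = np.fft.rfft(data)
    # Adding an independent uniform random phase to each coefficient makes the
    # resulting phases uniformly random while leaving |spectrum| unchanged.
    shift = rng.uniform(0.0, 2.0 * np.pi, len(spectrum))
    shift[0] = 0.0  # DC term must stay real (preserves the mean)
    if n % 2 == 0:
        shift[-1] = 0.0  # Nyquist term must stay real for even n
    return np.fft.irfft(spectrum * np.exp(1j * shift), n=n)


# Usage: moving-average-filtered white noise and one phase-randomized surrogate.
rng = np.random.default_rng(2)
data = np.convolve(rng.standard_normal(1024), np.ones(5) / 5, mode="same")
surrogate = phase_randomized_surrogate(data, rng)
```

By construction, `surrogate` has the same Fourier amplitudes and mean as `data` but a different time course.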

Algorithm 3 tests the null hypothesis that data is linearly filtered noise and observed through a nonlinear function. [3]

The null hypothesis of Algorithm 2 assumes that the data are Gaussian. This is an inconvenient assumption, since most experimental EEG data do not pass tests for Gaussianity. As the illustration above shows, a nonlinear transformation of a Gaussian linear stochastic signal makes the observed time series non-Gaussian. Hence, it is better to consider a more general null hypothesis: that the data are a linear Gaussian process measured through a static, invertible and possibly nonlinear measurement function.
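The loss of Gaussianity can be checked numerically with the sample excess kurtosis, which is close to 0 for Gaussian data and large for heavy-tailed data. The sketch below re-simulates eqns. 1 and 2. It assumes unit-variance Gaussian e_n and uses a hypothetical helper `excess_kurtosis`. Kurtosis is just one convenient indicator chosen here, not a formal normality test.

```python
import numpy as np


def excess_kurtosis(s):
    """Sample excess kurtosis: about 0 for Gaussian data, large for heavy tails."""
    s = np.asarray(s, dtype=float)
    z = (s - s.mean()) / s.std()
    return np.mean(z**4) - 3.0


# Gaussian AR(1) process (eqn. 1) and its cubed observation (eqn. 2).
rng = np.random.default_rng(3)
e = rng.standard_normal(20000)
x = np.empty_like(e)
x[0] = e[0] / np.sqrt(1 - 0.9**2)
for i in range(1, len(e)):
    x[i] = 0.9 * x[i - 1] + e[i]
y = x**3
print(excess_kurtosis(x), excess_kurtosis(y))  # near 0 versus far above 0
```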

The iterative amplitude adjusted Fourier transform (iAAFT) algorithm proposed by Schreiber and Schmitz [3] generates surrogates consistent with this null hypothesis. The basic idea is to undo the unknown measurement function h(·) of eqn. 2 by rank-order rescaling.

The algorithm first stores two things: the amplitudes of the Fourier coefficients of {y_n}, and a copy of {y_n} sorted in ascending order. A surrogate generated by Algorithm 1 or 2 can be used as the starting point of the iteration. Each iteration then has two steps:

1. The Fourier transform of the current surrogate is computed. Its Fourier amplitudes are replaced by those of the original data while its phases are kept, and the inverse transform is taken.
2. The resulting series is rescaled by rank. Each value s_n is replaced by the element of the sorted copy of the original data that has the same rank. Here rank(s_n) denotes the ascending rank of s_n, i.e., rank(s_n) = 2 if s_n is the second smallest element.

The two steps are repeated until the rescaled series no longer changes, or until a maximum number of iterations is reached. The final surrogate then has exactly the amplitude distribution of the original data and approximately its power spectrum.
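The iteration above can be sketched in NumPy as follows. The function names, the iteration cap `max_iter` and the `spectral_error` diagnostic are assumptions made for illustration. This is not the code from the repository mentioned at the end of this post. The sketch starts from a random shuffle and ends with the rank-rescaling step. The returned series is therefore an exact rearrangement of the original values, and its spectrum is only approximately matched.

```python
import numpy as np


def iaaft_surrogate(data, max_iter=200, rng=None):
    """Sketch of an iAAFT surrogate: same values as `data`, similar Fourier amplitudes."""
    rng = np.random.default_rng(rng)
    data = np.asarray(data, dtype=float)
    n = len(data)
    target_amp = np.abs(np.fft.rfft(data))  # stored Fourier amplitudes of {y_n}
    sorted_data = np.sort(data)             # stored sorted copy of {y_n}
    s = rng.permutation(data)               # start from a shuffle (Algorithm 1)
    for _ in range(max_iter):
        # Spectral step: impose the original amplitudes, keep the current phases.
        phases = np.angle(np.fft.rfft(s))
        s_spec = np.fft.irfft(target_amp * np.exp(1j * phases), n=n)
        # Rank-order step: the element of rank k gets the k-th smallest original value.
        ranks = np.argsort(np.argsort(s_spec))
        s_new = sorted_data[ranks]
        if np.array_equal(s_new, s):
            break  # converged: the rank ordering no longer changes
        s = s_new
    return s


def spectral_error(s, data):
    """Relative mismatch between the Fourier amplitudes of s and of data."""
    a, b = np.abs(np.fft.rfft(s)), np.abs(np.fft.rfft(data))
    return np.linalg.norm(a - b) / np.linalg.norm(b)


# Usage: a linear AR(1) process seen through the cubic measurement of eqn. 2.
rng = np.random.default_rng(4)
x = np.zeros(1024)
for i in range(1, len(x)):
    x[i] = 0.9 * x[i - 1] + rng.standard_normal()
y = x**3
surrogate = iaaft_surrogate(y, rng=rng)
print(spectral_error(surrogate, y), spectral_error(rng.permutation(y), y))
```

The first printed error (iAAFT) should be much smaller than the second (plain shuffle), because the shuffle discards the red spectrum of y_n.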

Surrogate Testing

For all three algorithms, surrogate testing is done with the following non-parametric rank approach. We first generate N surrogate data sets according to the chosen null hypothesis and compute the nonlinear metric for each surrogate. For a two-sided test, the null hypothesis is rejected if the metric computed on the data is greater than the maximum, or less than the minimum, of the metric over all surrogates. If the null hypothesis is true, the data are just one of N+1 exchangeable realizations. Each of these two outcomes therefore occurs by chance with probability 1/(N+1), so the two-sided test has significance level α = 2/(N+1), i.e., (1 - 2/(N+1))*100% confidence. Thus, with 39 surrogates, α = 2/40 = 0.05, which is 95% confidence. For a one-sided test (i.e., testing whether the metric computed on the data differs from the surrogates in only one pre-specified direction), α = 1/(N+1), so 19 surrogates are enough for 95% confidence.
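The rank test needs only the metric computed on the data and on each surrogate. In the minimal sketch below, `surrogate_test` is a hypothetical helper, and the surrogate statistics are placeholder numbers rather than results from any real data. The one-sided variant assumes the direction of interest is "larger than all surrogates".

```python
import numpy as np


def surrogate_test(stat_data, stat_surrogates, two_sided=True):
    """Non-parametric rank test against N surrogates.

    Rejects the null hypothesis if the data statistic is more extreme than every
    surrogate statistic. Returns (reject, alpha), where alpha is the probability
    of a false rejection when the null hypothesis is true.
    """
    stat_surrogates = np.asarray(stat_surrogates, dtype=float)
    n_surr = len(stat_surrogates)
    if two_sided:
        reject = stat_data > stat_surrogates.max() or stat_data < stat_surrogates.min()
        alpha = 2.0 / (n_surr + 1)
    else:
        # One-sided here means "data statistic larger than all surrogates".
        reject = stat_data > stat_surrogates.max()
        alpha = 1.0 / (n_surr + 1)
    return bool(reject), alpha


surr_stats = np.linspace(-1.0, 1.0, 39)  # placeholder statistics from 39 surrogates
print(surrogate_test(1.5, surr_stats))                        # (True, 0.05)
print(surrogate_test(0.0, surr_stats))                        # (False, 0.05)
print(surrogate_test(1.5, surr_stats[:19], two_sided=False))  # (True, 0.05)
```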

Limitations

All the algorithms above have one thing in common: the null hypothesis that the data come from a linear system. Some authors have proposed surrogate generation techniques in which the null hypothesis is that the data come from a noise-driven nonlinear system. This is a parametric approach (as opposed to the non-parametric approaches described above) that relies on a particular class of nonlinear models [4].

Another limitation is that the surrogates generated by Algorithms 1, 2 and 3 are stationary by construction. If the original data are non-stationary, the null hypothesis may therefore be rejected because of non-stationarity rather than nonlinearity. To overcome this issue, Nakamura et al. [5] proposed truncated Fourier transform (TFT) surrogates, which preserve the non-stationarity of the data. This is done by choosing a frequency cut-off f_l, preserving the Fourier phases at frequencies below this cut-off and randomizing the phases at frequencies above it. The null hypothesis addressed by the TFT method is therefore that the irregular fluctuations in the data arise from a stationary linear system, while slow trends are retained. A crucial parameter here is the choice of f_l. If it is too low, the TFT surrogates are stationary and essentially equivalent to those obtained with Algorithm 2 or 3. If it is too high, so many phases are preserved that the surrogates also retain the nonlinearity present in the data.
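The phase-truncation idea can be sketched as a variant of phase randomization. Here `f_cut` plays the role of f_l, measured in cycles per sample (from 0 to 0.5). The function name and the cut-off value in the usage are hypothetical. This sketch omits any amplitude-adjustment step, so it does not reproduce the exact procedure of Nakamura et al.; it only illustrates which phases are kept.

```python
import numpy as np


def tft_surrogate(data, f_cut, rng=None):
    """Sketch of a truncated Fourier transform (TFT) surrogate.

    Fourier amplitudes are kept at all frequencies; phases are kept below
    f_cut (cycles per sample) and randomized at and above it.
    """
    rng = np.random.default_rng(rng)
    data = np.asarray(data, dtype=float)
    n = len(data)
    spectrum = np.fft.rfft(data)
    freqs = np.fft.rfftfreq(n)  # 0, 1/n, ..., up to 0.5
    shift = rng.uniform(0.0, 2.0 * np.pi, len(spectrum))
    shift[freqs < f_cut] = 0.0  # preserve low-frequency phases (slow trends)
    shift[0] = 0.0  # DC term must stay real
    if n % 2 == 0:
        shift[-1] = 0.0  # Nyquist term must stay real for even n
    return np.fft.irfft(spectrum * np.exp(1j * shift), n=n)


# Usage: a linear trend plus noise, with a hypothetical cut-off of 0.02.
rng = np.random.default_rng(5)
t = np.arange(1000)
data = 0.01 * t + rng.standard_normal(len(t))
surrogate = tft_surrogate(data, f_cut=0.02, rng=rng)
```

With `f_cut=0` this reduces to plain phase randomization. With `f_cut` above 0.5 every phase is kept and the data are returned unchanged, which mirrors the trade-off in choosing f_l.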

Keep in mind that each method of generating surrogates comes with its own set of assumptions. Rejecting the null hypothesis therefore does not necessarily establish the presence of nonlinearity in the data. A rejection could instead be caused by some other characteristic that differs between the data and the surrogates (for example, non-stationarity) and has nothing to do with nonlinearity.

Python code to generate iAAFT and TFT surrogates is available at this repo.

References

[1] Jose A. Scheinkman and Blake LeBaron. Nonlinear dynamics and stock returns. Journal of Business, pages 311–337, 1989.

[2] A. R. Osborne, A. D. Kirwan, A. Provenzale, and L. Bergamasco. A search for chaotic behavior in large and mesoscale motions in the Pacific Ocean. Physica D: Nonlinear Phenomena, 23(1):75–83, 1986.

[3] Thomas Schreiber and Andreas Schmitz. Improved surrogate data for nonlinearity tests. Physical Review Letters, 77(4):635, 1996.

[4] Michael Small and Kevin Judd. Using surrogate data to test for nonlinearity in experimental data. International Symposium on Nonlinear Theory and its Applications, Vol. 2, 1997.

[5] Tomomichi Nakamura, Michael Small, and Yoshito Hirata. Testing for nonlinearity in irregular fluctuations with long-term trends. Physical Review E, 74(2):026205, 2006.