Random walk or diffusion models have been successful at predicting human decision making and guiding experiments. Here’s why.

In my last post I described two successful theories. Another successful theory is the family of diffusion or random walk models used to explain human decision behavior.

Diffusion Models for Simple Decisions

These diffusion models (a.k.a. random walk models) have been developed to explain the probability that a person will say ‘yes’ or ‘no’ in a binary decision task. In addition, the models explain the time it takes to make a yes-no decision. There are two mathematical formulations: one based on discrete and one based on continuous stochastic equations. However, the choice between them has little impact on the explanatory power of the theory. Both have a small number of parameters: three for the random walk model (Link, 1992) and four for the diffusion model (Ratcliff, 1978).
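The claim that the discrete and continuous formulations are nearly interchangeable can be illustrated with a small Python sketch. It assumes the simplest textbook setting rather than Link's or Ratcliff's full parameterizations: a walk between absorbing boundaries at 0 and `a`, starting at `z`, with no non-decision time and no trial-to-trial variability. The discrete version uses the classic gambler's-ruin formula; the continuous version uses the standard first-passage result for a Wiener process with drift `v` and noise `s`. The helper `discretize` and all parameter values are hypothetical choices made for illustration.

```python
import math


def random_walk_p_upper(p_up: float, start: int, upper: int) -> float:
    """Discrete formulation: the walk moves +1 with probability p_up and -1
    otherwise, starting at `start`. Returns the probability of reaching
    `upper` before 0 (the gambler's-ruin formula)."""
    if p_up == 0.5:
        return start / upper
    r = (1.0 - p_up) / p_up
    return (1.0 - r**start) / (1.0 - r**upper)


def diffusion_p_upper(v: float, z: float, a: float, s: float = 1.0) -> float:
    """Continuous formulation: Wiener process with drift v and noise s,
    starting at z between absorbing boundaries 0 and a. Returns the
    probability of reaching a before 0."""
    if v == 0.0:
        return z / a
    return (1.0 - math.exp(-2.0 * v * z / s**2)) / (1.0 - math.exp(-2.0 * v * a / s**2))


def discretize(v: float, z: float, a: float, s: float = 1.0, dt: float = 1e-4):
    """Hypothetical mapping of diffusion parameters onto a discrete walk with
    step size s*sqrt(dt); the step probability is chosen so that the mean
    displacement per step equals v*dt."""
    dx = s * math.sqrt(dt)
    p_up = 0.5 * (1.0 + v * math.sqrt(dt) / s)
    return p_up, round(z / dx), round(a / dx)


if __name__ == "__main__":
    v, z, a = 1.0, 1.0, 2.0  # hypothetical parameter values
    p_up, start, upper = discretize(v, z, a)
    print(f"random walk: {random_walk_p_upper(p_up, start, upper):.4f}")
    print(f"diffusion:   {diffusion_p_upper(v, z, a):.4f}")
```

With these hypothetical values the two printed choice probabilities agree to about four decimal places, and the agreement improves as `dt` shrinks.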

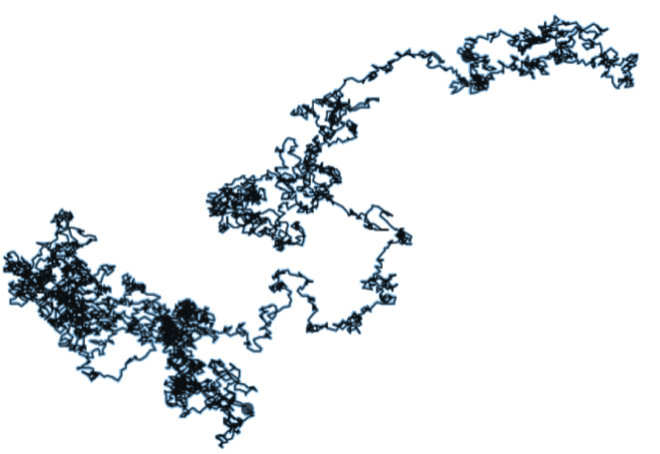

Figure: Examples of a diffusion process reaching a binary decision. For example, the upper boundary may represent a ‘yes’ decision and the lower boundary a ‘no’ decision. The picture shows traces of the process for six decisions. A decision is made by accumulating evidence until a boundary is reached. The model has a random component because at each time step a ‘coin’ is tossed to determine whether the walk moves towards the upper or the lower boundary. There is also a deterministic component, because the walk is made to drift towards one of the boundaries. The three important parameters of the model are 1) the distance of the boundaries from zero, 2) the rate of the drift, and 3) the position at which the walk starts; the walk does not have to start from zero but can start from some other position. This starting position is called bias.
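The process in the figure can be simulated in a few lines. The following is a minimal Python sketch, assuming boundaries placed symmetrically at plus and minus `boundary`, a fair coin that moves the walk up or down by `noise * sqrt(dt)` at each time step, and a constant deterministic `drift` added on top; `start` plays the role of the bias. The function names and parameter values are hypothetical, and the sketch omits any non-decision time.

```python
import math
import random
from typing import Optional, Tuple


def simulate_trial(drift: float, boundary: float, start: float = 0.0,
                   noise: float = 1.0, dt: float = 0.001,
                   rng: Optional[random.Random] = None) -> Tuple[str, float]:
    """Accumulate evidence from `start` until it crosses +boundary ('yes')
    or -boundary ('no'). Returns the decision and the decision time."""
    rng = rng if rng is not None else random.Random()
    step = noise * math.sqrt(dt)  # size of each random 'coin' step
    x, t = start, 0.0
    while -boundary < x < boundary:
        coin = step if rng.random() < 0.5 else -step  # random component
        x += drift * dt + coin                         # plus deterministic drift
        t += dt
    return ("yes" if x >= boundary else "no"), t


def simulate(n: int, drift: float, boundary: float, start: float = 0.0,
             seed: int = 0) -> Tuple[float, float]:
    """Run n independent trials; return P('yes') and the mean decision time."""
    rng = random.Random(seed)
    trials = [simulate_trial(drift, boundary, start, rng=rng) for _ in range(n)]
    p_yes = sum(d == "yes" for d, _ in trials) / n
    mean_t = sum(t for _, t in trials) / n
    return p_yes, mean_t


if __name__ == "__main__":
    # Hypothetical conditions: no drift, no drift with a biased start, positive drift.
    for label, drift, start in [("unbiased", 0.0, 0.0),
                                ("biased start", 0.0, 0.5),
                                ("positive drift", 1.0, 0.0)]:
        p_yes, mean_t = simulate(500, drift, boundary=1.0, start=start)
        print(f"{label:>15}: P(yes)={p_yes:.2f}, mean time={mean_t:.2f}")
```

Moving `start` toward the upper boundary raises the proportion of ‘yes’ responses even when the drift is zero, which is the effect the caption calls bias.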

Major Achievements of Diffusion/Random Walk Models

What is the greatest achievement of these random walk/diffusion models of decision making? They guided the discovery of neurons responsible for decision making in, e.g., the lateral intraparietal cortex (Hanks et al., 2006; see also Mulder et al., 2012). Probably no connectionist model can boast a similar achievement. This may not be as significant as the discovery of genes predicted by the theory of evolution, or the discovery of ion channels predicted by the Hodgkin-Huxley model, but it is still a greater success than most connectionist computational models can claim.

The model does not describe any specific physiological process (e.g., membrane potential or a threshold reached to generate an action potential). Rather, it describes an abstract process of decision making under stochastic conditions. In a way, the model reduces the complexity of physiological processes to only a few parameters and a simple evidence-accumulation mechanism. It offers an elegant theoretical description of a process whose physiological details are otherwise unknown.

The Elements of Success

What made it successful? As with the examples I described previously, it is again about how the theory treats its assumptions and parameters. The few parameters that diffusion models have are all interpretable and meaningful. For example, the drift rate (see Figure) is interpreted as a human faculty: a gradual evidence accumulation process representing a tendency towards a certain response. This is similar to the values of membrane permeability being linked to ion channels in the Hodgkin-Huxley model. These fitted values can be carried over to new experiments of a similar type; in that way the values become empirically testable, and their ability to explain human or animal decision processes can be established. No parameter of the model is left lingering in the air, i.e., no parameter helps fit the model without offering an empirically testable prediction. In general, these models are excellent at fitting empirical data despite their small number of parameters (e.g., Wagenmakers, 2009), and they beat connectionist models at that task (Ratcliff et al., 1999). A large palette of experiments involving binary decisions has been fitted with them, producing the desirable domino effect that I discussed before. In my own work, I was able to extend the random walk theory to a task in which one is not allowed to respond immediately but has to wait for a permission signal (Nikolić & Gronlund, 2002). I managed to do that without creating a conflict with other explanations.
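To make the interpretability of the parameters concrete, the sketch below uses the closed-form equations known as the EZ-diffusion model (introduced by Wagenmakers and colleagues) rather than a full fit of Ratcliff's model. It assumes an unbiased start halfway between the boundaries, no trial-to-trial variability, positive drift, and the common scaling convention s = 0.1. Under these assumptions, accuracy and the mean and variance of response times map one-to-one onto drift rate `v`, boundary separation `a`, and non-decision time `ter`, so each parameter can be recovered from summary statistics of the data. The function names and example values are hypothetical.

```python
import math


def ez_forward(v: float, a: float, ter: float, s: float = 0.1):
    """Predicted proportion correct, mean RT and RT variance for an unbiased
    diffusion (start at a/2) with drift v > 0 and noise s, plus a fixed
    non-decision time ter added to every response."""
    y = -v * a / s**2
    pc = 1.0 / (1.0 + math.exp(y))
    mdt = (a / (2.0 * v)) * (1.0 - math.exp(y)) / (1.0 + math.exp(y))
    vrt = (a * s**2 / (2.0 * v**3)) * (
        2.0 * y * math.exp(y) - math.exp(2.0 * y) + 1.0
    ) / (math.exp(y) + 1.0) ** 2
    return pc, mdt + ter, vrt


def ez_inverse(pc: float, mrt: float, vrt: float, s: float = 0.1):
    """Recover (v, a, ter) from proportion correct, mean RT and RT variance.
    This sketch only handles 0.5 < pc < 1 (positive drift)."""
    if not 0.5 < pc < 1.0:
        raise ValueError("pc must lie strictly between 0.5 and 1")
    L = math.log(pc / (1.0 - pc))  # logit of accuracy
    x = L * (L * pc**2 - L * pc + pc - 0.5) / vrt
    v = s * x**0.25
    a = s**2 * L / v
    y = -v * a / s**2
    mdt = (a / (2.0 * v)) * (1.0 - math.exp(y)) / (1.0 + math.exp(y))
    return v, a, mrt - mdt


if __name__ == "__main__":
    # Hypothetical parameters: drift 0.25, boundary 0.12, non-decision time 0.3 s.
    pc, mrt, vrt = ez_forward(0.25, 0.12, 0.3)
    print(f"predicted: Pc={pc:.3f}, MRT={mrt:.3f} s, VRT={vrt:.5f} s^2")
    print("recovered: v=%.3f, a=%.3f, ter=%.3f" % ez_inverse(pc, mrt, vrt))
```

A round trip through `ez_forward` and `ez_inverse` returns the original parameters, which illustrates that each parameter is pinned down by an observable property of the data rather than left floating.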

Any lessons learned?

So what do these successful theories have in common? What is it at their core that made scientific progress possible? I think the key is finding the perfect separation line between what should be kept unchangeable, i.e., the parts of the theory that hold for all possible applications (the fixed parts), and the parts that are allowed to change in each individual application (the flexible parts). The fixed parts are referred to as assumptions (see also axioms, postulates) and are usually described by equations, but they can in principle also contain numbers (a famous example in physics is Planck's constant). The flexible parts are usually referred to as parameters, but nothing in principle prevents them from also being described by flexible equations.

A much more important aspect is how each of these parts contributes to the explanatory power of the theory. The fixed parts should be formulated such that they already resolve all possible conflicts. The choices of the free parts should then be unable to create conflicts, because the fixed parts have already been set up to prevent them. This means that the free parts should have only a limited space to work with. Their space should be moulded by the wisdom of the fixed parts. Everything that happens in that space should be meaningful, empirically testable, and have the potential to refute the fixed part of the theory.

All the pressure is thus on getting the fixed part right. A good theory is one whose fixed part is formulated such that it works well with empirical data. Connectionism (a.k.a. neural network models) does not have a strong enough fixed part. The amount of freedom each new study has is too large. Explanations in a connectionist manner are easy to create, but those explanations also easily lead to conflicts with other connectionist explanations. This results in a proliferation of theoretical proposals. Unfortunately, these proposals are by and large mutually exclusive. Basically, the field as a whole does not have many shared axioms. Consequently, there is really no theory to speak of.

See also the related post: The Crisis of Computational Neuroscience.

This post is part of a blog series by Danko Nikolić.

References:

Hanks, T. D., Ditterich, J., & Shadlen, M. N. (2006). Microstimulation of macaque area LIP affects decision-making in a motion discrimination task. Nature Neuroscience, 9(5), 682.

Link, S. W. (1992). The wave theory of difference and similarity. Psychology Press.

Mulder, M. J., Wagenmakers, E. J., Ratcliff, R., Boekel, W., & Forstmann, B. U. (2012). Bias in the brain: a diffusion model analysis of prior probability and potential payoff. Journal of Neuroscience, 32(7), 2335-2343.

Nikolić, D., & Gronlund, S. D. (2002). A tandem random walk model of the SAT paradigm: Response times and accumulation of evidence. British Journal of Mathematical and Statistical Psychology, 55(2), 263-288.

Ratcliff, R. (1978). A theory of memory retrieval. Psychological Review, 85(2), 59.

Ratcliff, R., Van Zandt, T., & McKoon, G. (1999). Connectionist and diffusion models of reaction time. Psychological Review, 106(2), 261.

Wagenmakers, E. J. (2009). Methodological and empirical developments for the Ratcliff diffusion model of response times and accuracy. European Journal of Cognitive Psychology, 21(5), 641-671.
