Afterimages are color-inverted perceptual images that persist after one has fixated on an image for a long time. The way afterimages behave suggests that fast adaptive mechanisms are at play.

In my previous posts I introduced the idea that fast adaptive mechanisms play a critical role in brain function. This started with my quest to find an alternative to connectionism, which, as I argued, has problems. I argued that fast adaptive mechanisms can provide a means for fast information routing in the brain, and I also suggested that neurons in the brain can learn how to adapt quickly.

The next question is: can we use these ideas to specify empirically testable hypotheses? And can we then conduct experiments that seek evidence supporting (or not supporting) those ideas? Here I would like to describe an experiment that we recently conducted, which was directly motivated by the idea that neurons rapidly adapt to adjust the properties of our biological neural networks.

The experiment that I am about to describe was behavioral. This means that we did not directly measure the adaptations of neurons. Rather, we assessed those adaptations indirectly by asking participants about their internal, perception-like experiences of afterimages. We then explored whether the way afterimages change over time is best explained by fast adaptive mechanisms in neurons.

Afterimages

A textbook way of inducing an afterimage is to fixate on a drawing for, say, 10 seconds or longer, and then look at a blank white background. What one sees is an exact replica of what was just viewed, except that all the colors are inverted: red becomes green, yellow becomes blue, white becomes black, and so on. The secret to building a good afterimage is to hold your eyes still while viewing the original image; you need to be patient during the induction of an afterimage.

Afterimages also occur in everyday perception. If there is a bright source of light in your visual periphery, you will likely build an afterimage even if the exposure is shorter than 10 seconds. You may see the contours of the peripheral objects as an inverted image of the scene. Interestingly, afterimages can last a long time, sometimes for minutes.

Figure 1. An example afterimage. Fixate on one point in this image for at least 10 seconds and then look at a blank white background. Which famous person do you see in the afterimage?

How do afterimages come about?

We don’t know. The oldest theory is that of resource depletion, according to which afterimages result from the fatigue of neurons. The idea is that if the nervous system is provoked with a lot of red, it will get “tired” of red. Consequently, the neurons responsible for red will become less able to respond to red stimuli. Because red and green oppose each other (neurons for red compete with neurons for green and vice versa), such fatigue of the red neurons will help the green neurons win the competition even when plenty of red is present. As a result, we see green on a white background even though no green should be seen (remember that white light is in fact a mixture of all the colors of the spectrum in roughly equal amounts).
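The fatigue account can be made concrete with a toy opponent-channel model. This is a minimal sketch under stated assumptions, not a model from the study described here: the helper names `adapt_gain` and `opponent_signal`, the adaptation rate, and the starting gains are all hypothetical and were picked only for illustration. During induction, strong red input lowers the gain of the red channel; afterwards, white light drives both channels equally, but the fatigued red channel loses the competition and the output tips toward green.

```python
# Toy sketch of the resource-depletion (fatigue) account of afterimages.
# All names, gains and rates are hypothetical, chosen for illustration only.

def adapt_gain(gain, drive, seconds, rate=0.05, dt=0.1):
    """Fatigue a channel: each time step lowers its gain in proportion
    to how strongly it is driven and to its current gain."""
    steps = int(round(seconds / dt))
    for _ in range(steps):
        gain -= rate * drive * gain * dt
    return gain

def opponent_signal(red_input, green_input, red_gain, green_gain):
    """Red-green opponent output: positive reads as red, negative as green."""
    return red_gain * red_input - green_gain * green_input

# Induction: 12 s of strong red input fatigues only the red channel.
red_gain = adapt_gain(1.0, drive=1.0, seconds=12.0)
green_gain = 1.0

# Test: a white background drives the red and green channels equally.
signal = opponent_signal(1.0, 1.0, red_gain, green_gain)
print(f"red gain after induction: {red_gain:.2f}")
print("perceived:", "green" if signal < 0 else "red" if signal > 0 else "neutral")
```

With these made-up numbers the red gain falls to roughly half its starting value, so the white background yields a negative (green) opponent signal. Any positive adaptation rate gives the same qualitative outcome; only the strength of the afterimage changes.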

Here is another possible view of how afterimages come about. What if we see green not because the resources for red have been depleted, but because the system infers that there is too much red coming in, and concludes that it is not the seen objects that are red but rather the source of light? To see the actual colors of the objects, rather than the color of the light source, the system then needs to adapt accordingly. This would be consistent with the hypothesis that neurons may quickly adapt to re-route activation flow within our neural networks, as I explained previously.

Interestingly, this hypothesis makes a counterintuitive, empirically testable prediction. If the hypothesis is right, it should be possible to clear afterimages quickly once conditions make it clear to the visual system that the existing adaptations are no longer appropriate, that is, that they result in incorrect perception of colors. In other words, afterimages should last a long time only when the visual system has no good grounds for making changes; as soon as such grounds appear, the changes should take place rapidly.

This is what we tested. One way of giving the brain the “grounds” to erase afterimages was simply to change the “light source” conditions of the presented images, that is, the filters. If we induce afterimages with green filters, we can then instantly remove those filters and measure how rapidly the afterimages fade away.

Measuring afterimages

Before we go into the details of how we made the brain change its “mind” about afterimages, let me explain how we measured afterimages. We needed to know how vivid the afterimages were, and we needed this information with high temporal precision: we wanted to know how vividness changed in steps of 200 ms. It is difficult for subjects to report the vividness of a complex afterimage like the one in the example above; merely inspecting such an image takes longer than 200 ms. We needed something quick, so we designed afterimages consisting of a single, uniformly distributed color.

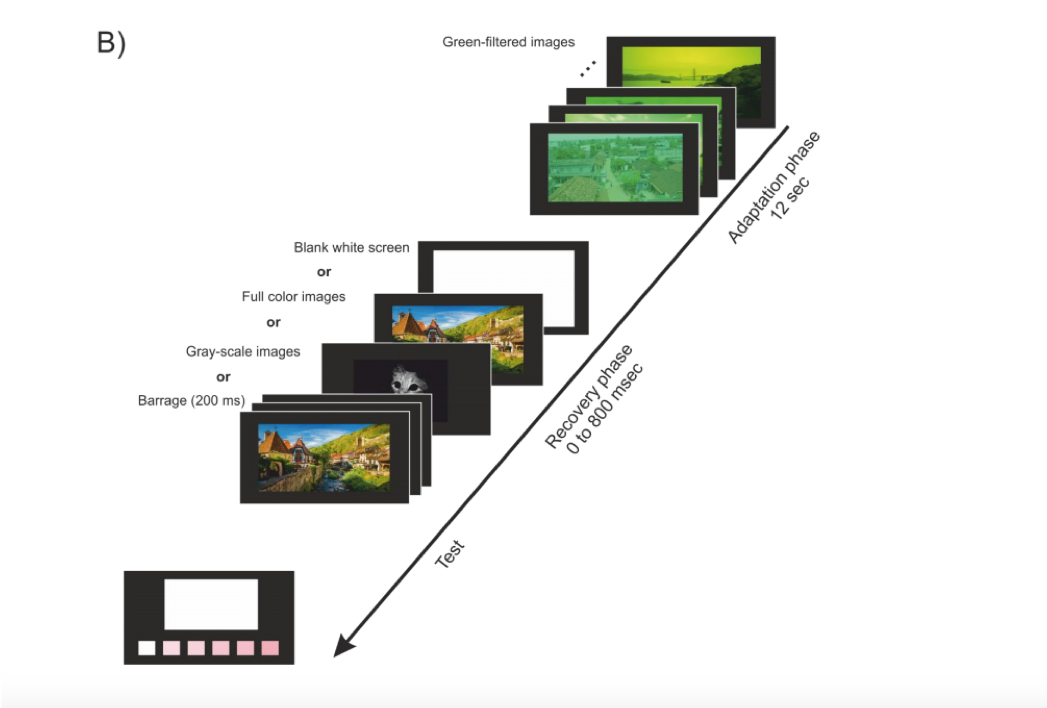

Such afterimages were easy to create by having subjects view a series of images of natural scenes passed through an 80% green filter. The resulting afterimages appeared as an evenly distributed pink haze. Try it yourself with the image at the top of this post.

Figure 2. A photograph of a visual scene passed through a green filter. We used similar images in our experiment to induce pink afterimages, which were then assessed after different images had been shown in between.

We asked subjects simply to report the amount of pink after they had first watched, for 12 seconds, various natural scenes filtered through green. We asked this pinkness question after different time intervals: 200, 400, 600, and 800 ms. We also had a 0 ms interval, which served as the reference point against which we compared all subsequent changes in afterimage intensity. This allowed us to measure how the intensity of afterimages changed over time.
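Comparing every interval against the 0 ms reference amounts to a simple normalization. The sketch below assumes one pinkness rating per delay for a single subject; the `ratings` values are invented for illustration and are not data from the experiment, and `relative_intensity` is a hypothetical helper name.

```python
# Express afterimage ratings relative to the rating at the 0 ms reference delay.
# The ratings below are invented for illustration; they are not experimental data.

def relative_intensity(ratings, reference_ms=0):
    """Return each rating as a fraction of the rating at the reference delay."""
    reference = ratings[reference_ms]
    return {delay: value / reference for delay, value in ratings.items()}

# Hypothetical pinkness ratings for one subject, keyed by delay in milliseconds.
ratings = {0: 8.0, 200: 6.0, 400: 5.0, 600: 4.5, 800: 4.0}

relative = relative_intensity(ratings)
for delay in sorted(relative):
    fraction = relative[delay]
    print(f"{delay:>3} ms: {fraction:.0%} of initial vividness, {1 - fraction:.0%} lost")
```

Because each value is divided by the 0 ms rating, the reference itself always comes out as 100%, and differences in how individual subjects use the rating scale drop out of the comparison.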

With this setup in place, it was easy to put the visual system into conditions in which there were no longer any grounds for correcting for an excess of green. We simply presented photographs of natural scenes briefly again, but this time without a green filter, that is, in full color. This situation clearly demanded readjusting any perceptual green-removal adaptations that may have taken place. The question was then simply: will the pink afterimages persist when subjects view only a blank white screen, but disappear quickly when they view full-color images in between?

Afterimages faded quickly

This was indeed the case. There was, in addition, a small, unexpected twist to our predictions.

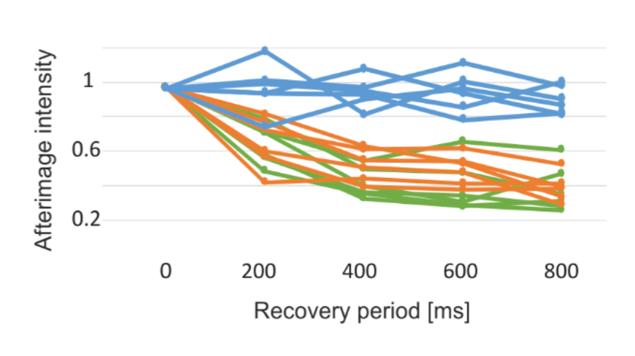

Let me first explain the result consistent with the idea of fast adaptations. When subjects only stared at the white background during the waiting phase, the intensity of the pink afterimages did not change. Whether this waiting period was 0 ms or 800 ms, the intensity remained constant. The afterimage was not going away, which is consistent with the previous literature; if unchallenged, afterimages persist.

But when we challenged the afterimages by presenting full-color photographs, the afterimages faded. Even a brief presentation of those full-color images (200 ms) was enough for the afterimages to lose more than 30% of their initial vividness. With longer exposure to full-color images, they lost even more of their vividness.

Therefore, despite the 12-second build-up time of the afterimage, a mere 200 ms was enough to erase a good chunk of that build-up.

Figure 3. The results from one of our experiments. Six subjects built afterimages by watching green-filtered images as illustrated in Figure 2. Each of them reported the intensity of the afterimages while viewing either a blank screen (blue lines) or fully colored images (orange and green lines). The intensity of the afterimages did not change when subjects viewed the blank screen, but decreased rapidly when they viewed fully colored images.

Longer exposure times (800 ms) led to even further decay of the afterimages, with the maximum clearance reaching about 50%. Therefore, about half of an afterimage induced over 12 seconds was cleared within less than a second.

This brings us to the unexpected twist mentioned above. We did not manage to clear more than about 50% of the afterimage on average. No matter what we did, we could not clear more. In Figure 3, the green traces represent the condition in which we presented not one but a barrage of full-color images of natural scenes, one scene after another at a pace of 200 ms each. This means that by the 800 ms recovery interval, a subject would have seen four such images, which clearly put pressure on the visual system. This did not help. The 50% limit of average recovery could not be crossed.

We had to conclude that the fast clearing of afterimages is not the whole story. There is also a slow, more robust component of the afterimage that is hard to remove quickly, or at least we did not find a way to do so.
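One way to picture a fast and a slow part is a toy two-component model, in which the afterimage is the sum of a fast component that decays only while full-color images challenge it and a slow component that barely changes within one second. This is a minimal sketch, not a model fitted in the study: the split `FAST_SHARE = 0.5` and the time constant `TAU_FAST_MS = 200` are hand-picked assumptions, the slow component is simply held constant, and the function name `afterimage` is hypothetical.

```python
import math

# Toy two-component sketch of afterimage clearing (illustration only).
# Both parameters are hand-picked assumptions, not values fitted to data.
FAST_SHARE = 0.5      # fraction of the afterimage carried by the fast component
TAU_FAST_MS = 200.0   # decay time constant of the fast component when challenged

def afterimage(t_ms, challenged):
    """Remaining afterimage intensity after t_ms (1.0 = intensity at 0 ms).

    The slow component is treated as constant over sub-second intervals.
    The fast component decays exponentially only while full-color images
    challenge it; on a blank screen it is left untouched.
    """
    slow = 1.0 - FAST_SHARE
    if challenged:
        fast = FAST_SHARE * math.exp(-t_ms / TAU_FAST_MS)
    else:
        fast = FAST_SHARE
    return slow + fast

for t in (0, 200, 400, 600, 800):
    print(f"{t:>3} ms  blank: {afterimage(t, False):.2f}  "
          f"full color: {afterimage(t, True):.2f}")
```

However long the challenge lasts, the remaining intensity only approaches `1 - FAST_SHARE`, which is one way of expressing a clearance ceiling like the 50% limit described above. Whether the real slow component is truly constant, or merely slow, is something this sketch cannot decide.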

So, what does that mean?

We think that the fast part of afterimage removal can be explained by the fast adaptive mechanisms described in the previous posts (here and here). Fast adaptive mechanisms may determine how we see the world. If there is too much green in an image, it may be difficult to perceive red objects in such a green-dominated world. Fast adaptive mechanisms of the type we observed in these experiments may make rapid corrections so that we nevertheless keep seeing a red object. The same holds for all other kinds of distortions of the lighting in visual scenes: part of an image may be too dark, too bright, too yellow, and so on. Such fast adaptations may tune the visual system to better detect the relevant aspects of a scene, that is, to “see” better.

However, the fact that only about 50% of an afterimage can be cleared so quickly may indicate that slow adaptive mechanisms also play a role in perception, and that the fast mechanisms cannot completely override what the slow adaptations do.

To download the manuscript of the original paper, click here.

This is part of the blog series on mind and brain problems by Danko Nikolić. To see the entire series, click here.